From an idea to an ML paper in under 1 hour

or, vibe-researching using Gemini-CLI to find logical inconsistencies in text

This project is open source on GitHub.

A few days ago I casually asked on our Lossfunk slack how to detect logical inconsistencies in a set of documents.

My motivation to do this was Donald Trump, and how he wavers on everything. In fact, his behavior is now a meme called TACO.

I was curious if we can detect instances of this via his speeches!

My first impulse was to do the project myself. But then I reminded myself that my mindset is still somewhat stuck in the past. I need to be lazy and see how far I can go without writing a single line of code.

At Lossfunk, we’re also experimenting with using AI to accelerate AI research! So it was also an excuse to build my initial intuitions about how far current LLMs can go towards automated research.

So, I decided to use the newly released Gemini CLI to do vibe-research: implement this project and run experiments, with the end goal of producing a technical report (with citations included).

I was amazed by the outcome, and this post documents my journey and lessons along the way!

Fleshing out the initial idea via Claude

The first thing I always do with my ideas is flesh them out with Claude. I instruct it to be my brainstorming partner and help write a detailed markdown plan for me (or a coding agent) to follow.

My research hypothesis was that I could create an inconsistency vector by embedding an artificial set of logically inconsistent statement pairs (like “I love pizza” and “I hate pizza”) and averaging the difference between the embeddings of each pair. To apply it to a new dataset, you add the inconsistency vector to the embedding of each sentence and then look for the sentences most similar to the transformed embedding. This should surely work, right?

I gave Claude a detailed but high-level plan for the project:
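To make the hypothesis concrete, here is a minimal sketch in plain Python. It is not the code Gemini wrote: the 3-dimensional toy vectors stand in for real sentence embeddings, and the helper names (`inconsistency_vector`, `most_similar`) are hypothetical. The toy data is built so that the idea works by construction, which says nothing about how it behaves on real embeddings.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)

def inconsistency_vector(pairs):
    """Average of (embedding_b - embedding_a) over contradictory pairs."""
    diffs = [[y - x for x, y in zip(a, b)] for a, b in pairs]
    return [sum(col) / len(diffs) for col in zip(*diffs)]

def most_similar(query, corpus, skip=None):
    """Index and score of the corpus vector closest to `query`,
    ignoring the sentence the query was derived from (`skip`)."""
    candidates = [i for i in range(len(corpus)) if i != skip]
    best = max(candidates, key=lambda i: cosine(query, corpus[i]))
    return best, cosine(query, corpus[best])

# Toy "embeddings" (assumption): the last axis encodes like vs. dislike.
seed_pairs = [
    ([1.0, 0.0, 1.0], [1.0, 0.0, -1.0]),  # "I love pizza" / "I hate pizza"
    ([0.0, 1.0, 1.0], [0.0, 1.0, -1.0]),  # a second contradictory pair
]
v = inconsistency_vector(seed_pairs)

corpus = [
    [1.0, 1.0, 1.0],    # sentence 0
    [1.0, 1.0, -1.0],   # sentence 1: contradicts sentence 0 in this toy space
    [-1.0, 1.0, 1.0],   # sentence 2: unrelated
]
shifted = [x + y for x, y in zip(corpus[0], v)]
match, score = most_similar(shifted, corpus, skip=0)
print(match, round(score, 3))
```

In a real run the toy vectors would come from an embedding model, and the returned candidates would still need a judge to confirm that they are contradictions rather than paraphrases.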

help me brainstrom the following project, with an ultimate aim of writing instructions for a separate ai coding agent.

given a set of document (say speeches), i want to detect logically inconsistent statements. My plan is the following and i will mention where I need help:

- Download a popular dataset (say speeches, but give me suggestions)

- Make a list of logical inconsistent statements (i have a few like "Tom likes Pizza" and "Tom hates Pizza", and "A is a mother of B" and "A is a father of B".. expand and refine this list to 10 pairs)

- Embed these using an embedding model (say openai's embedding model?)

- Take difference in embeddings of these pairs and averaging them out to find a logical inconsistent vector?

- Embed the whole dataset, sentence by sentence

- Go through each sentence's vector, and add the computed logical inconsistent vector to it

- Find most similar sentences to the new vector, and put a threshold to select the ones that are highly similar, and those would be candidates for logical inconsistent

- Make these candidate pairs, and use LLM-as-judge (gpt4o-mini) to find whether they are truly logically inconsistent

what do you think? help me refine this plan. i will finally feed this plan into a coding agent to help implement

a good outcome of this would be a technical post, a great outcome will be an actual paper that I can submit to a conference

Claude inevitably ends up giving code directly. So it’s important to:

- Tell it that you don’t want code

- Focus on a scoped-down experiment first, with more experiments mentioned later

- Use a simple dataset first

My intention is to get water flowing through the pipes first, and then expand scope incrementally.

i don't want code, i just want task.md file in codeblocks with high level but detailed instructions

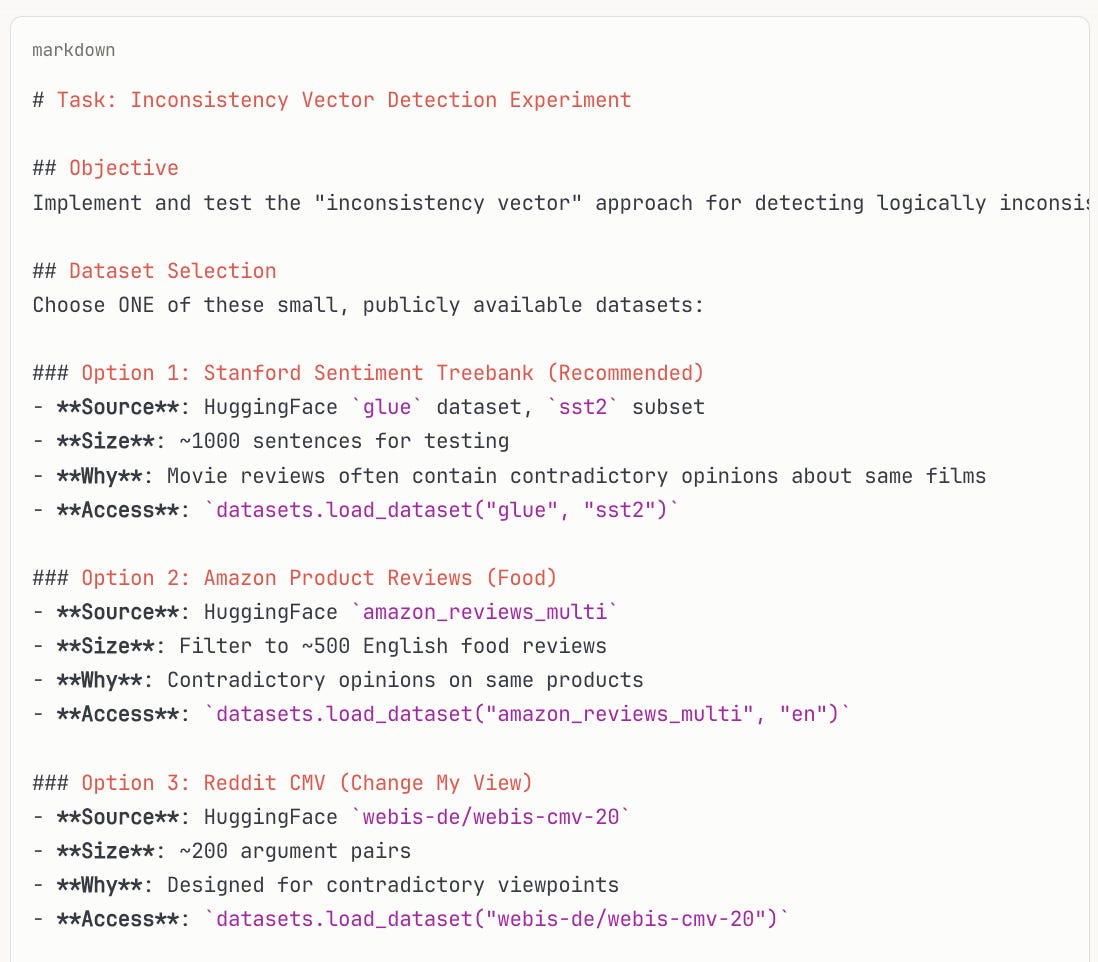

also on a dataset, can we start with some popular dataset that's small (maybe it's available on huggingface or other popular locations).

Claude’s final output:

Converting task.md to first experiment

Because I was simulating a fully automated pipeline, I didn’t read or edit the final output. I just copy-pasted it into a task.md file in a folder.

Then I downloaded Gemini CLI and typed the following into it:

implement project as detailed in task.md

That’s it! After pressing enter, the agent:

- Made requirements.txt

- Installed libraries

- Wrote code

- Ran the analysis

- Summarised results into a markdown file

It was pretty impressive! But the results were a letdown. The inconsistency vector method didn’t work: it just found similar sentences.

(The last section investigates why this didn’t work! I did debugging later on)

Gemini also created an artificial dataset to reverify its approach and got 0% precision and 0% recall.

Auto-suggested 2nd experiment: clustering and then LLM-as-a-judge

As soon as I got dismal results, Gemini (to my surprise) suggested a 2nd experiment that I could do: cluster similar sentences into categories (using K-means), and then use LLM-as-judge to find inconsistent pairs within each cluster.

Its rationale was that we’re more likely to find inconsistent pairs amongst sentences on the same topic (like eating, or timing) so it makes sense to cluster them.
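The clustering step can be sketched roughly like this. It is a toy illustration under stated assumptions, not Gemini's actual implementation: the K-means here is a tiny hand-rolled version seeded with the first `k` points so the result is deterministic, the 2-dimensional vectors are made-up stand-ins for sentence embeddings, and `pairs_within_clusters` is a hypothetical helper name.

```python
from itertools import combinations

def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iters=10):
    """Tiny K-means. Centroids are seeded with the first k points
    (an assumption made here for determinism, not a recommendation)."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assign every point to its nearest centroid.
        labels = [
            min(range(k), key=lambda c: sq_dist(p, centroids[c]))
            for p in points
        ]
        # Move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels

def pairs_within_clusters(labels):
    """All index pairs whose sentences landed in the same cluster."""
    clusters = {}
    for idx, label in enumerate(labels):
        clusters.setdefault(label, []).append(idx)
    return [pair for members in clusters.values()
            for pair in combinations(members, 2)]

sentences = [
    "I love pizza",
    "The meeting is at noon",
    "I hate pizza",
    "The meeting is at 3pm",
]
# Made-up 2-D "embeddings": first axis is food, second axis is timing.
embeddings = [[1.0, 0.1], [0.0, 1.0], [0.9, 0.0], [0.1, 0.9]]

labels = kmeans(embeddings, k=2)
candidate_pairs = pairs_within_clusters(labels)
for i, j in candidate_pairs:
    print(sentences[i], "<->", sentences[j])
```

Only the pairs that share a cluster go to the judge, which is what keeps the number of LLM calls manageable compared with comparing every sentence against every other.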

So, I asked it to update task.md with the results of the previous run and then add the new experiment as the next step. I do this to keep the context small and manageable.

I set my OPENROUTER_API_KEY and then re-ran Gemini CLI with the updated task.md.

This time, it worked! It detected logically inconsistent pairs in a movie review dataset!

Let’s run this on Trump’s speeches and generate a technical report!

I then asked Gemini to make the script accept any text file as input. I found a Trump speeches dataset online and ran the script on it. (I set the maximum number of evaluations to 200 and the maximum number of pairs to find to 10.)
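The two limits act as a budget on the LLM-as-judge loop. A minimal sketch of that idea, assuming a `judge(a, b)` callable that returns `True` for an inconsistent pair: the function and parameter names are hypothetical, and `toy_judge` is a keyword stand-in so the example runs without an API key (the real pipeline calls an LLM here).

```python
def find_inconsistencies(candidate_pairs, judge, max_evaluations=200, max_pairs=10):
    """Run `judge` over candidate pairs until either budget is exhausted.

    Returns the pairs judged inconsistent and the number of judge calls made.
    """
    found = []
    evaluations = 0
    for a, b in candidate_pairs:
        if evaluations >= max_evaluations or len(found) >= max_pairs:
            break
        evaluations += 1
        if judge(a, b):
            found.append((a, b))
    return found, evaluations

def toy_judge(a, b):
    """Stand-in for an LLM call: flags love/hate pairs only."""
    return ("love" in a and "hate" in b) or ("hate" in a and "love" in b)

candidates = [
    ("I love pizza", "I hate pizza"),
    ("I love pizza", "I love pasta"),
    ("The shop is open", "The shop is closed"),
]
# A budget of 2 evaluations means the third pair is never judged.
found, used = find_inconsistencies(candidates, toy_judge, max_evaluations=2)
print(found, used)
```

Capping both numbers bounds the cost of a run even when the candidate list is large.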

Lo, and behold! It found inconsistencies in what Trump said (surprise! surprise!)

I then asked Gemini to write a LaTeX report on the whole project with citations.

plan to write a technical report or paper on this topic, citing relevant background literature, first make a plan and then output in latex that i can upload on overleaf to get a pdf. see task.md, code, results folder to see what to include and how to present findings.

Happily, Gemini will search on Google for citations!

Finally, it produced a LaTeX file. When I compiled it on Overleaf, it sort of rendered! But I had to do a lot of to-and-fro with Gemini to get a final version that rendered correctly without any errors. (I noticed it remained stuck in an edit loop, when it would have been easier to just replace the whole report!)

But, now I do have a big, beautiful report on my project!

TLDR

Gemini helped me finish a (mini) research project in under an hour and produced a technical report that you can see here: https://github.com/paraschopra/logical-inconsistency-detector/blob/main/Logical_inconsistency_report.pdf

My observations about Gemini CLI (somewhat generalizable to other agents):

(Good) If the success criteria are stated upfront and it doesn’t meet them, it suggests alternative approaches

(Good) It does online searches for grounding information

(Bad) It doesn’t auto-commit changes, so you can’t revert easily (maybe I missed it, but this seems like an easy win!)

(Bad) If it is stuck in a loop, it has no meta-awareness to try a different approach (e.g. rewrite the whole file instead of editing line by line)

That’s it! I come away mighty impressed!

PS: Why the inconsistency vector approach didn’t work

I debugged later and found that the dataset I was using had lots of near-duplicate sentences with just a few changes here and there. For example, the dataset contained these two sentences:

Sentence 1: ", but it also does the absolute last thing we need hollywood doing to us : it preaches .'"

Sentence 2: "'but it also does the absolute last thing we need hollywood doing to us'"

So when the inconsistency vector is added to one sentence, the result ends up being close to another very similar sentence.

I tried limiting similarity to <0.9 but >0.7, and re-ran on Trump’s speeches. But even that didn’t work! The original method kept on finding similar sentences:

- Score: 0.8976 | Original: 'Thank you everybody. Thank you. Thank you.' | Candidate: 'Thank you very much everybody. Thank you.'

- Score: 0.8921 | Original: 'We're going to do the wall and by the way, who's going to pay for the wall?' | Candidate: 'I DON'T HEAR YOU. WHO IS GOING TO PAY FOR THE WALL?'

So, either the sentence embedding model we used isn’t great, or high dimensional spaces are weird enough to not be understood fully in 1 hour! ¯\_(ツ)_/¯
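For reference, the similarity band is simple to express. This is a rough sketch rather than the project's code: `filter_candidates` is a hypothetical name, and the two entries are the candidates listed above. Both scores fall inside the 0.7 to 0.9 band, so the filter keeps them even though they are paraphrases, not contradictions.

```python
def filter_candidates(scored_pairs, low=0.7, high=0.9):
    """Keep (score, original, candidate) tuples with low < score < high."""
    return [(s, a, b) for s, a, b in scored_pairs if low < s < high]

# The two candidates reported in the post.
scored = [
    (0.8976,
     "Thank you everybody. Thank you. Thank you.",
     "Thank you very much everybody. Thank you."),
    (0.8921,
     "We're going to do the wall and by the way, who's going to pay for the wall?",
     "I DON'T HEAR YOU. WHO IS GOING TO PAY FOR THE WALL?"),
]

kept = filter_candidates(scored)
print(len(kept), "of", len(scored), "candidates survive the band")
```

A band on cosine similarity can drop exact duplicates, but it has no way to tell a paraphrase from a contradiction at the same score.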

The author, Paras Chopra, is founder and researcher at Lossfunk.

PS: Lossfunk is an independent research lab. So if you want to collaborate with us, check out lossfunk.com

interesting approach! my concerns are about the final results though - it doesn't capture the context in which things are said and WHEN things are said (for e.g. poll over 30% vs poll over 48% - where they said at the same time, if not how far apart?), these additional features would contribute to actually figuring out the inconsistencies (for e.g. the first example of $6mil USD coming through). It'd be great if we are able to add capturing these additional things in the aforementioned pipeline

I wonder what happens when people do this for Biotech/ QC/ Medicine, domains that are superspecialized and hard to debunk.